Learning about Racism and Algorithms in Criminal Data Analytics

Storyboard Text

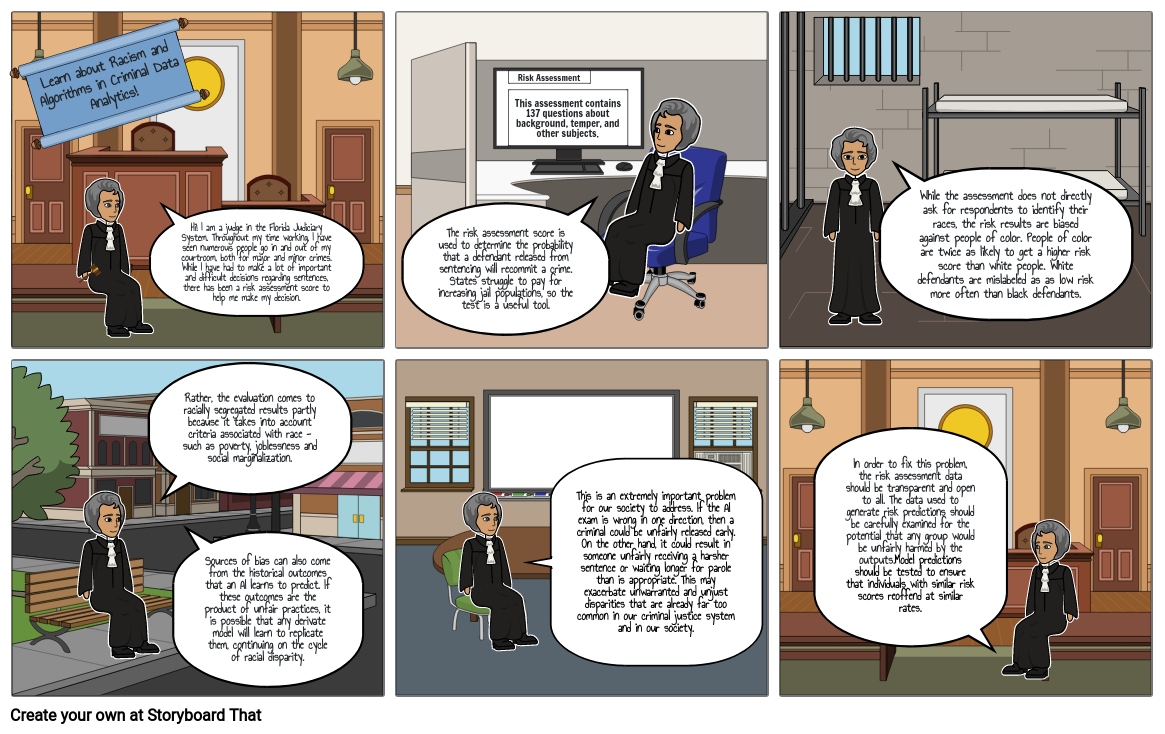

- Learn about Racism and Algorithms in Criminal Data Analytics!

- Hi! I am a judge in the Florida judicial system. Throughout my career, I have seen numerous people pass through my courtroom for both major and minor crimes. I have had to make many important and difficult decisions about sentencing, and a risk assessment score has been there to help me make them.

- The risk assessment score estimates the likelihood that a defendant, once released, will commit another crime. Because states struggle to pay for growing jail populations, the score is seen as a useful tool for deciding who can safely be released.

- Risk Assessment: The assessment contains 137 questions about a defendant's background, temper, and other subjects.

- While the assessment does not directly ask respondents to identify their race, its results are biased against people of color. Black defendants who did not go on to reoffend were nearly twice as likely as white defendants to be labeled higher risk, while white defendants who did reoffend were mislabeled as low risk more often than Black defendants.

- Rather, the evaluation produces racially disparate results partly because it takes into account factors correlated with race, such as poverty, joblessness, and social marginalization.

- Bias can also come from the historical outcomes that an AI learns to predict. If those outcomes are the product of unfair practices, any model trained on them may learn to replicate them, perpetuating the cycle of racial disparity.

- This is an extremely important problem for our society to address. If the algorithm errs in one direction, someone who poses a real risk could be released early. If it errs in the other, someone could unfairly receive a harsher sentence or wait longer for parole than is appropriate. Either way, it may exacerbate unwarranted and unjust disparities that are already far too common in our criminal justice system and in our society.

- To fix this problem, the risk assessment data should be transparent and open to public scrutiny. The data used to generate risk predictions should be carefully examined for any potential to unfairly harm a particular group. Model predictions should also be tested to ensure that individuals with similar risk scores reoffend at similar rates, regardless of race.
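The two kinds of unfairness described above (unequal mislabeling across groups, and scores that mean different things for different groups) can be checked with a few lines of code. The sketch below is illustrative only: the records, the group labels "A" and "B", the 1 to 10 score scale, the cutoff of 5, and every function name are hypothetical assumptions, not the real assessment or its data.

```python
from collections import defaultdict

# Hypothetical toy records: (group, risk_score on a 1-10 scale, reoffended?).
# These are invented for illustration and are NOT real defendant data.
RECORDS = [
    ("A", 8, False), ("A", 7, True), ("A", 3, False), ("A", 9, True),
    ("A", 6, False), ("A", 2, False),
    ("B", 4, True), ("B", 2, False), ("B", 7, True), ("B", 3, False),
    ("B", 8, False), ("B", 1, False),
]

HIGH_RISK_CUTOFF = 5  # assumption: scores >= 5 count as "higher risk"


def false_positive_rate(records, group):
    """Share of people in `group` who did NOT reoffend but were labeled higher risk."""
    non_reoffenders = [s for g, s, r in records if g == group and not r]
    if not non_reoffenders:
        return float("nan")
    flagged = sum(1 for s in non_reoffenders if s >= HIGH_RISK_CUTOFF)
    return flagged / len(non_reoffenders)


def false_negative_rate(records, group):
    """Share of people in `group` who DID reoffend but were labeled low risk."""
    reoffenders = [s for g, s, r in records if g == group and r]
    if not reoffenders:
        return float("nan")
    missed = sum(1 for s in reoffenders if s < HIGH_RISK_CUTOFF)
    return missed / len(reoffenders)


def calibration_table(records):
    """Observed reoffense rate for each (score band, group) pair."""
    counts = defaultdict(lambda: [0, 0])  # key -> [number reoffended, total]
    for group, score, reoffended in records:
        band = "high" if score >= HIGH_RISK_CUTOFF else "low"
        counts[(band, group)][0] += int(reoffended)
        counts[(band, group)][1] += 1
    return {key: hit / total for key, (hit, total) in counts.items()}


for g in ("A", "B"):
    # Prints "A 0.5 0.0" then "B 0.25 0.5" for the toy data above.
    print(g, false_positive_rate(RECORDS, g), false_negative_rate(RECORDS, g))
print(calibration_table(RECORDS))
```

In this toy data, people labeled high risk reoffend at the same rate (0.5) in both groups, yet group A's non-reoffenders are flagged twice as often as group B's, and group B's reoffenders are the ones more often labeled low risk. A score can pass one check while failing the other, which is why examining both is worthwhile.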
