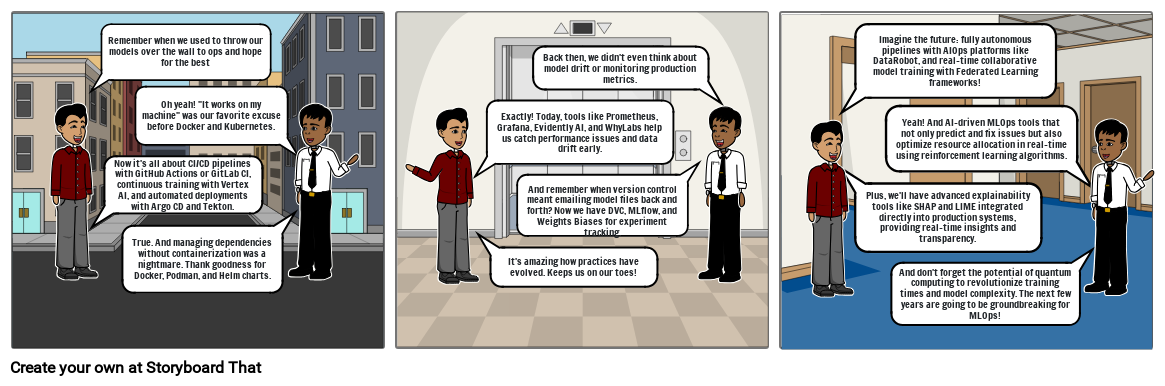

MLOps: Through the Ages

Storyboard Text

- Slide: 1

- Remember when we used to throw our models over the wall to ops and hope for the best?

- Now it's all about CI/CD pipelines with GitHub Actions or GitLab CI, continuous training with Vertex AI, and automated deployments with Argo CD and Tekton.

- True. And managing dependencies without containerization was a nightmare. Thank goodness for Docker, Podman, and Helm charts.

- Slide: 2

- Back then, we didn't even think about model drift or monitoring production metrics.

- Exactly! Today, tools like Prometheus, Grafana, Evidently AI, and WhyLabs help us catch performance issues and data drift early.

- And remember when version control meant emailing model files back and forth? Now we have DVC for data and model versioning, plus MLflow and Weights & Biases for experiment tracking.

- It's amazing how practices have evolved. Keeps us on our toes!

- Slide: 3

- Imagine the future: fully autonomous pipelines on automated ML platforms like DataRobot, and real-time collaborative model training with federated learning frameworks!

- Yeah! And AI-driven MLOps tools that not only predict and fix issues but also optimize resource allocation in real time using reinforcement learning.

- Plus, we'll have advanced explainability tools like SHAP and LIME integrated directly into production systems, providing real-time insights and transparency.

- And don't forget the potential of quantum computing to slash training times and handle far more complex models. The next few years are going to be groundbreaking for MLOps!

- Slide: 0

- Oh yeah! "It works on my machine" was our favorite excuse before Docker and Kubernetes.
